The Cost of Poor Data Labeling: How Errors Impact AI Model Performance

Data labeling is a fundamental step in the creation of AI and machine learning (ML) systems. However, when this process is mishandled, it can derail even the most advanced AI projects. Poor data labeling results in inaccurate training datasets, which can have far-reaching consequences for model performance and reliability.

Understanding the Ripple Effect of Poor Data Labeling

At its core, poor data labeling undermines the one thing a supervised model learns from: its labels. Annotation errors don't stay confined to isolated parts of the dataset; they propagate through the model's entire workflow, leading to flawed predictions, increased costs, and lost opportunities.

The Consequences of Poor Data Labeling

- Compromised Model Accuracy

- What Happens: Incorrect or incomplete annotations send the model contradictory or missing signals, so it learns the wrong patterns or fails to learn the right ones.

- Example: In autonomous vehicles, mislabeled traffic signs could lead to dangerous misinterpretations, such as treating a "Stop" sign as "Yield."

- Propagation of Bias

- What Happens: Labeling errors can introduce biases that distort model predictions, and if the dataset already reflects societal or systemic biases, the model can reproduce or even amplify them.

- Example: A hiring algorithm trained on biased resume data may unfairly favor specific demographics.

- Financial Costs of Rework

- What Happens: Fixing flawed datasets requires re-labeling, retraining, and additional quality checks, all of which consume resources.

- Example: A retail company may spend thousands of dollars re-annotating image datasets after discovering that products were incorrectly categorized.

- Delayed Deployment

- What Happens: Models trained on flawed datasets require significant debugging, delaying their time-to-market.

- Example: An NLP chatbot with poorly annotated intent data will require extensive testing and corrections, slowing launch timelines.

- Loss of Trust and Reputation

- What Happens: Users lose faith in systems that deliver inconsistent or inaccurate results.

- Example: A healthcare diagnostic tool trained on poorly labeled X-ray images may fail to detect critical conditions, eroding trust in AI-powered medical solutions.
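To make the accuracy point concrete, here is a toy simulation in Python. It is a sketch under simplified assumptions, not a benchmark. The dataset is one-dimensional with a hypothetical ground truth of "class 1 when x >= 50". The model is a 1-nearest-neighbour classifier, chosen because it memorizes its training labels and so shows the mechanism most directly. Annotation errors are simulated by flipping every fifth training label. Other model types degrade differently, so treat the numbers as an illustration of the mechanism rather than an estimate for real systems.

```python
def true_label(x: float) -> int:
    """Hypothetical ground truth: class 1 for x >= 50, class 0 otherwise."""
    return 1 if x >= 50 else 0


def make_training_set(flip_every: int = 0) -> list[tuple[float, int]]:
    """Label the points 0..99; if flip_every > 0, mislabel every flip_every-th point."""
    data = []
    for x in range(100):
        label = true_label(x)
        if flip_every and x % flip_every == 0:
            label = 1 - label  # simulated annotation error
        data.append((float(x), label))
    return data


def nearest_neighbour_predict(train: list[tuple[float, int]], x: float) -> int:
    """1-NN: return the label of the training point closest to x."""
    _, label = min(train, key=lambda point: abs(point[0] - x))
    return label


def accuracy(train: list[tuple[float, int]]) -> float:
    """Score the classifier on held-out points that sit between the training points."""
    test_points = [x + 0.25 for x in range(100)]
    correct = sum(
        nearest_neighbour_predict(train, t) == true_label(t) for t in test_points
    )
    return correct / len(test_points)


clean_acc = accuracy(make_training_set())
noisy_acc = accuracy(make_training_set(flip_every=5))
print(f"clean labels:   {clean_acc:.2f}")  # 1.00
print(f"20% mislabeled: {noisy_acc:.2f}")  # 0.80
```

With clean labels the classifier gets every test point right. With 20% of the training labels flipped, accuracy falls to 80%, because each mislabeled training point misleads every prediction made near it.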

Key Factors Leading to Poor Data Labeling

- Inadequate Training: Unskilled annotators may misunderstand the requirements, leading to errors.

- Ambiguous Guidelines: Lack of clear instructions for labeling tasks results in inconsistencies.

- Volume Pressure: Large-scale projects rushed under tight deadlines often compromise on quality.

- Insufficient Quality Control: Skipping review processes allows mistakes to go undetected.

How to Mitigate the Risks of Poor Data Labeling

- Develop Clear Annotation Guidelines

- Provide detailed instructions with examples to ensure consistency across annotators.

- Employ Experienced Annotators

- Work with trained professionals or outsourcing partners who understand the nuances of your project.

- Leverage Technology

- Use AI-assisted tools to pre-label data, then have annotators verify and correct the suggestions, improving both speed and accuracy.

- Implement Rigorous Quality Assurance

- Conduct multiple rounds of reviews and audits to catch and fix errors early.

- Iterate and Improve

- Establish a feedback loop so that errors caught in review are shared with annotators and used to refine the guidelines and the process.
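One common way to put the quality-assurance step into practice is to have two annotators label the same sample and measure how often they agree beyond chance using Cohen's kappa. This article does not prescribe that metric; it is one option. The sketch below assumes exactly two annotators and categorical labels. It computes kappa and lists the items the annotators disagree on so they can be routed to a reviewer. The function names and the traffic-sign labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: fraction of items both annotators labeled identically.
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator picked labels at random
    # with their own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1.0:
        # Both annotators used one identical label for every item, so kappa is
        # undefined; returning 1.0 is a convention of this sketch.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


def disagreements(labels_a: list[str], labels_b: list[str]) -> list[int]:
    """Indices of items the two annotators labeled differently (review candidates)."""
    return [i for i, (a, b) in enumerate(zip(labels_a, labels_b)) if a != b]


# Hypothetical labels from two annotators for the same eight traffic-sign images.
annotator_a = ["stop", "stop", "yield", "speed_limit", "yield", "stop", "speed_limit", "yield"]
annotator_b = ["stop", "yield", "yield", "speed_limit", "yield", "stop", "stop", "yield"]

kappa = cohens_kappa(annotator_a, annotator_b)
to_review = disagreements(annotator_a, annotator_b)
print(f"Cohen's kappa: {kappa:.2f}")       # 0.61
print(f"items to re-review: {to_review}")  # [1, 6]
```

Here the annotators agree on 6 of 8 items (75%), but kappa is only about 0.61 once chance agreement is removed. Persistently low agreement on a task often points back to ambiguous guidelines, and the disagreement list gives reviewers a concrete starting point for the feedback loop.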

The Hidden Costs of Poor Data Labeling

- Missed Business Opportunities

- Impact: Models fail to perform in real-world scenarios, limiting their ability to solve critical business problems.

- Example: An e-commerce platform whose recommendation model is trained on mislabeled product data may serve poor recommendations and see a decline in sales.

- Increased Liability

- Impact: Faulty AI systems can lead to legal and financial repercussions, especially in regulated industries like healthcare and finance.

- Example: A misdiagnosis by an AI-driven medical system could lead to lawsuits and hefty fines.

- Ethical Implications

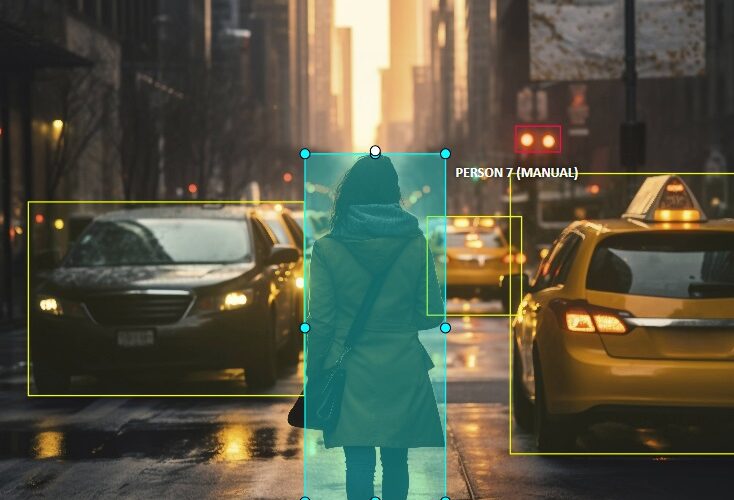

- Impact: Poorly labeled datasets risk perpetuating harmful stereotypes or discrimination.

- Example: A biased facial recognition system could contribute to wrongful arrests or exclusions.

A Case for High-Quality Data Labeling

Investing in accurate and consistent data labeling isn't just a technical requirement; it's a strategic one. High-quality annotations:

- Improve model performance and reliability.

- Reduce the need for costly rework.

- Build user trust and support regulatory compliance.

- Enable models to scale and adapt across diverse environments.

Why Partner with Outline Media Solutions?

Outline Media Solutions specializes in delivering top-tier data labeling services tailored to meet the needs of diverse industries. With a team of skilled annotators, cutting-edge tools, and stringent quality assurance processes, we ensure your datasets are AI-ready. Our commitment to precision, scalability, and confidentiality makes us the ideal partner for your AI projects.

Ready to elevate your AI models? Connect with Outline Media Solutions today to ensure your data labeling processes are error-free and impactful.
